|

The Stanford Multiview |

We're happy to introduce two new multi-view light field datasets. The datsets were captured with hand-held Lytro Illum cameras, and unlike previous datasets each scene is photographed from a diversity of camera poses.

This data emulates the case of a mobile platform employing a lenslet-based light field camera, or multiple light field cameras operating simultaneously. The individual light fields have small baselines, on the order of a centimeter, while the camera poses vary over a broader baseline, on the order of a meter or more.

These are the first such datasets to our knowledge, and it is our hope that they will enable research into multi-view light field processing including registration, self-calibration, structure-from-motion, interpolation, and feature extraction.

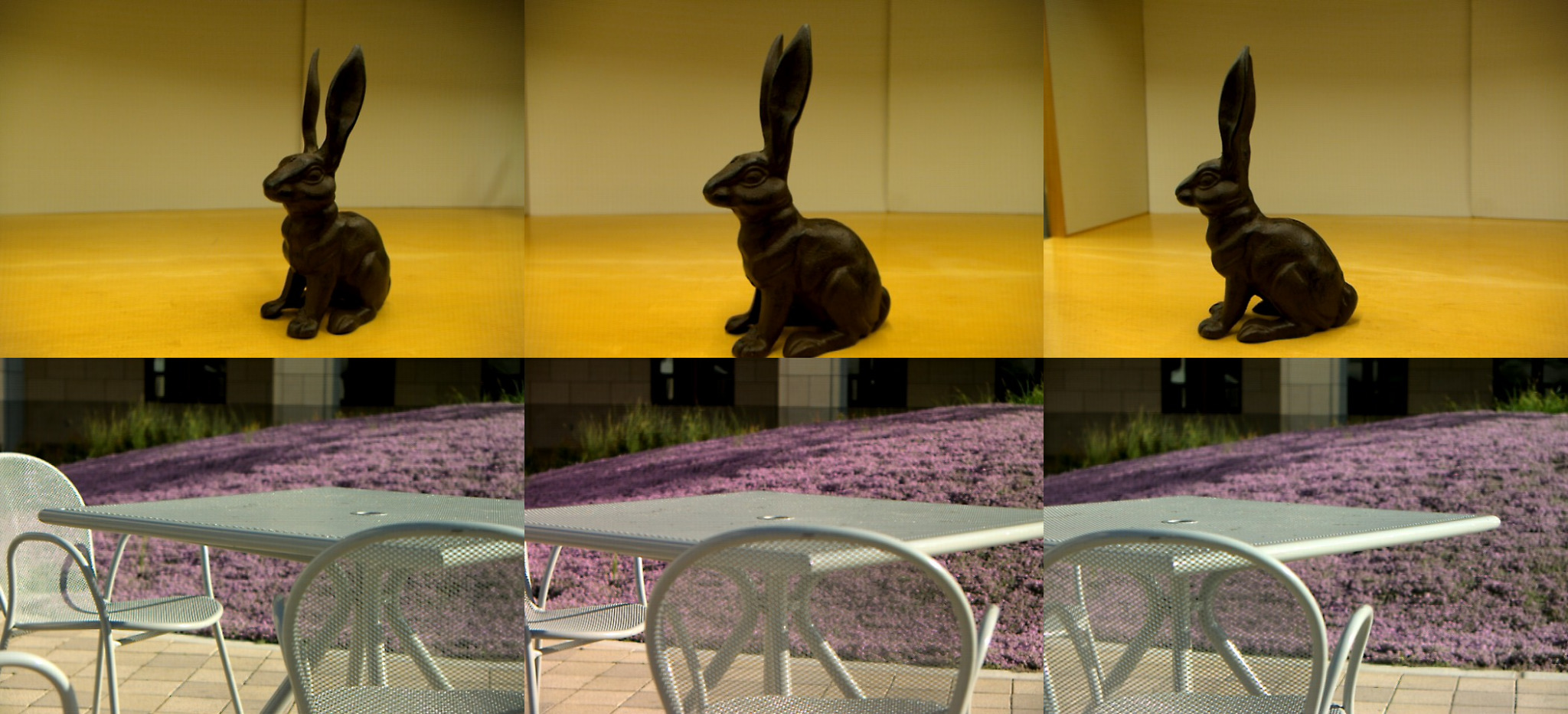

Scenes include examples of Lambertian and non-Lambertian surfaces, occlusion, specularity, subsurface scattering, fine detail, and transparency.

Example code for loading ESLF files is included in the LiFF light field features toolbox here. For more information on the file formats in these datasets please see the supplemental information here.

D. G. Dansereau, B. Girod, and G. Wetzstein, “LiFF: Light field features in scale and depth,” in Computer Vision and Pattern Recognition (CVPR), 2019. Available here, supplementary material here.

@inproceedings{dansereau2019liff,

author = {Donald G. Dansereau and Bernd Girod and Gordon Wetzstein},

title = {{LiFF}: Light Field Features in Scale and Depth},

booktitle = {Computer Vision and Pattern Recognition ({CVPR})},

year = {2019},

month = jun,

organization={IEEE},

URL = {http://dgd.vision/Papers/dansereau2019liff.pdf}

}

This dataset was collected with a single hand-held Lytro camera. Focus, zoom, and exposure settings vary by image.

The dataset contains 4211 light fields organised into 30 categories. Each scene is captured from 3-5 camera poses, over a total of 850 scenes. A set of 'clusters' files identify which groups of images belong in each scene.

Each image is available as a raw Lytro LFR file or a decoded ESLF file. Each is accompanied by a rendered extended-depth-of-field 2D thumbnail, Lytro-generated depth map, and metadata file. Lytro camera calibration and lists of image clusters by scene are also provided.

For this dataset three Lytro Illum cameras were rigidly mounted together and their focus and zoom settings fixed. There are 3042 images in total: 421 x 3 indoor, 543 x 3 outdoor, and 75 checkerboard calibration images for each indoor and outdoor. Different geometries and settings were used for the indoor and outdoor scenes.

Indoor scenes: the two outer camreas were toed in for better coverage at close range. The geometry of the cameras as well as their zoom and focus settings were fixed. Exposure time and gain were allowed to vary per image.

Outdoor scenes: the cameras were mounted with approximately parallel principal axes. The geometry of the cameras as well as their zoom and focus settings were fixed, but with different values from the indoor scenes. Exposure time and gain were allowed to vary per image.

Checkerboard images: for calibration purposes, sets of checkerboard images are provided for both the indoor and outdoor configurations.

Some of the imagery covers the same scene multiple times. i.e. the three-camera rig was translated around a point of interest, or panned through an overlapping set of images, or for the indoor scene elements of the scene translated through different poses.

All images are in Lytro LFR format, accompanied by thumbnails. Note that the depth of field of the thumbnails is not indicative of the true depth of field of the light fields, this is the thumbnail stored by the Lytro camera and includes a virtual depth of field effect.

| File | Size | Description |

|---|---|---|

| caldata-B5143104560.tar | 1.7G | Lytro Illum calibration data |

| caldata-B5144101330.tar | 1.7G | Lytro Illum calibration data |

| caldata-B5152104290.tar | 1.7G | Lytro Illum calibration data |

| RenderRecipe_FullDOF.json | 4.7k | Recipe used with the Lytro software to render the extended-depth-of-field thumbnails in mvlf_eslf.tar |

| 3vlf_lfr.tar | 150G | The three-view light field dataset, indoor and outdoor |

| mvlf_eslf.tar | 207G | Multi-view dataset in ESLF format with accompanying depth maps and 2D thumbnails |

| mvlf_lfr.tar | 218G | Multi-view dataset in LFR format only |

| mvlf_clusters.zip | 14k | Lists of images clustered per scene, with one file of clusters for each image category |

| mvlf_clusters.v2.zip | 14k | Cluster list with corrections, Jan 2019 |

The release folder is accessible at http://lightfields.stanford.edu/mvlf/release/